How we improved our extension's tests

TL;DR: Mocking the outside world (in our case, the websites we validate the extension against) let us speed up and strengthen our integration tests.

Where we were

The main product we ship is our web extension, which provides extra services on e-commerce websites.

The first iteration of the integration tests focused on automating our manual test scenarios. The process combined the difficulty of manual tests with the complexity of browser automation tools.

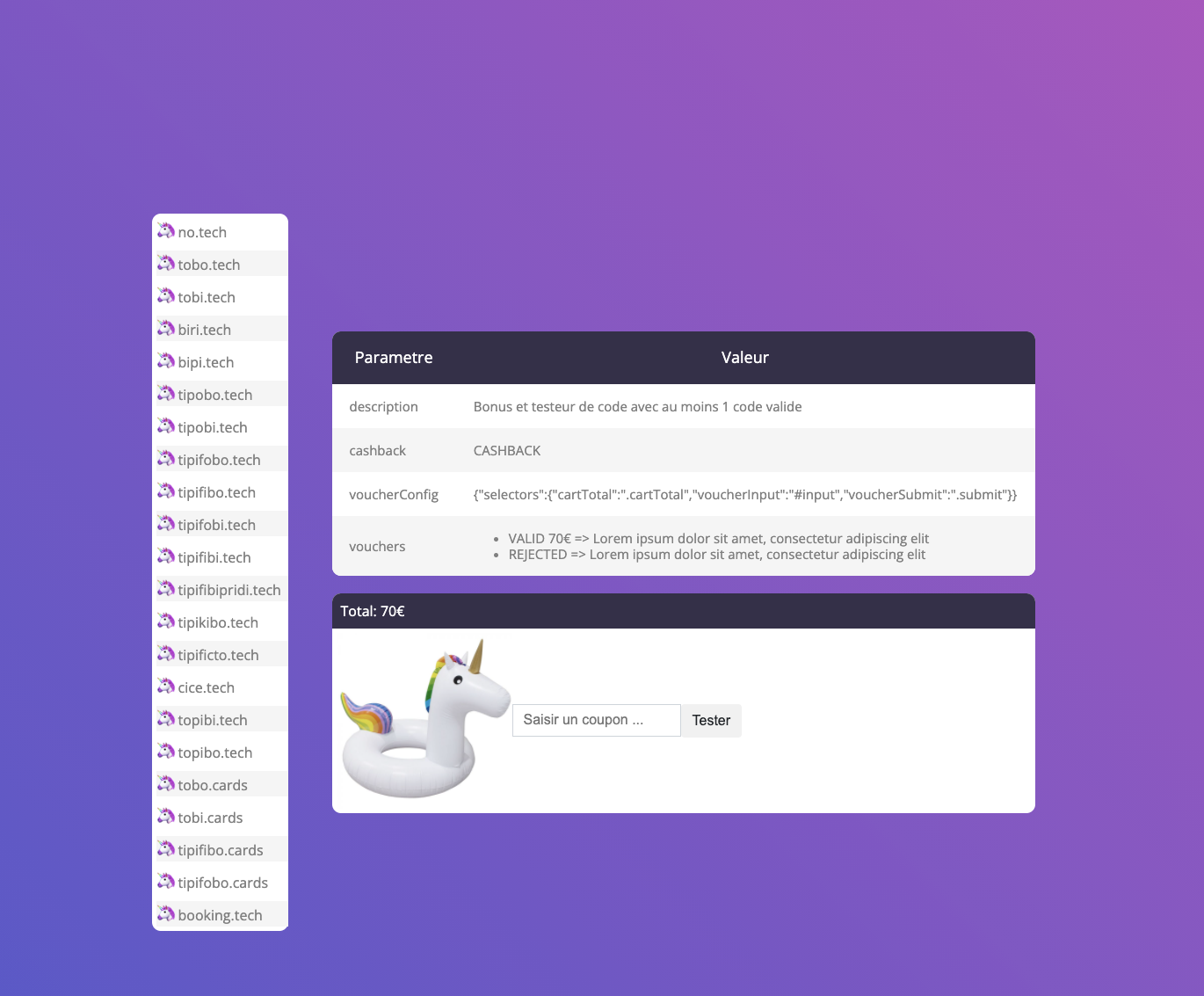

We'll focus on a single test scenario for the rest of this article: the extension should automatically try multiple vouchers, including a valid one, then apply the best one to the cart.
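To make the expected outcome of this scenario concrete, here is a minimal model of the property the test checks. It is not the extension's implementation. It assumes a hypothetical `tryVoucher` helper that returns the cart total after applying a code, or `null` when the shop rejects it. It also assumes that "best" means the lowest resulting total.

```typescript
// Hypothetical model of the scenario under test: try every voucher,
// keep the one that yields the lowest cart total.
// `tryVoucher` is an assumed helper returning the total after applying
// a code, or null when the shop rejects the code.
type TryVoucher = (code: string) => number | null;

interface BestVoucher {
  code: string;
  total: number;
}

function bestVoucher(codes: string[], tryVoucher: TryVoucher): BestVoucher | null {
  let best: BestVoucher | null = null;
  for (const code of codes) {
    const total = tryVoucher(code);
    if (total === null) {
      continue; // rejected: invalid, expired or misspelled
    }
    // Strict comparison: on a tie, the first voucher tried is kept.
    if (best === null || total < best.total) {
      best = { code: code, total: total };
    }
  }
  return best;
}

// Usage: a cart worth 100 where only "VALID" is accepted, for 10 off.
const shopRule: TryVoucher = (code) => (code === "VALID" ? 90 : null);
const chosen = bestVoucher(["EXPIRED", "VALID", "TYPO"], shopRule);
console.log(chosen); // { code: 'VALID', total: 90 }
```

The test passes only if the valid voucher ends up applied, whatever order the codes are tried in.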

A tedious process

To test a behavior, we first had to find a website that exhibited it; in our case, a website with an item whose price could be reduced with a voucher. Once we had found one, we could start working on the automation.

Since we didn't control the website, we had to automate both the buying process and the user's interactions with the extension. The extension interactions could be reused across many scenarios as long as the extension didn't change, but the buying process had to be rewritten for every website and for every update to it.

Fragile and not so strong tests

Depending so heavily on an external resource meant that our tests weren't reproducible. A single selector change on the website or an expired voucher was enough to make a test fail.

This led us to use a record-and-replay proxy, so that each test scenario always ran against the same version of the website, with matching data from our infrastructure.
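The following minimal sketch shows the idea behind record-and-replay. It is independent of any real proxy tool, and every name in it is hypothetical. In record mode, requests reach the live upstream and the responses are saved. In replay mode, only the saved responses are served. The usage at the end shows why this freezes a test in the past.

```typescript
// Minimal sketch of record-and-replay; not modeled on a specific proxy tool.
// "record": requests reach the live upstream and responses are saved.
// "replay": saved responses are served and upstream is never called.
interface RecordedResponse {
  status: number;
  body: string;
}

type Upstream = (method: string, url: string) => RecordedResponse;
type Mode = "record" | "replay";

class RecordReplayProxy {
  private tape: { [key: string]: RecordedResponse } = {};

  constructor(private mode: Mode, private upstream: Upstream) {}

  setMode(mode: Mode): void {
    this.mode = mode;
  }

  handle(method: string, url: string): RecordedResponse {
    const key = method + " " + url;
    if (this.mode === "record") {
      const response = this.upstream(method, url); // live website or API
      this.tape[key] = response; // saved for future runs
      return response;
    }
    if (!Object.prototype.hasOwnProperty.call(this.tape, key)) {
      throw new Error("No recording for " + key);
    }
    return this.tape[key];
  }
}

// Usage: record once, then the live site changes.
let livePrice = 100;
const proxy = new RecordReplayProxy("record", () => ({ status: 200, body: "price=" + livePrice }));
proxy.handle("GET", "https://shop.example/cart");

livePrice = 80; // the real site (or our voucher data) changes
proxy.setMode("replay");
console.log(proxy.handle("GET", "https://shop.example/cart").body); // price=100
```

The replayed response still says `price=100` after the live value has changed. A passing replayed test therefore only tells you the extension worked against the world as it was at recording time.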

The catch: this setup only validated a new extension version as if it were running in the past, at the moment the test was recorded.

A breaking change between our infrastructure and the extension could therefore go unnoticed. We couldn't trust the results as much as we wanted, and re-recording a test was more tedious than validating manually, since it was essentially a manual validation plus the extra work of automating the browser on the website.

Manual testing became our only trusted validation process, with an ever-growing list of scenarios and a lot of wasted time. Both the developer feedback loop and our time to delivery were far too long.

The path we chose

To restore trust in our tests, we removed the record-and-replay layer and went back to live data. To make that possible, we built a collection of fake e-commerce websites, each exposing a behavior we needed to test.

Mock the moving parts

The main pain point with the previous setup was the dependency on external websites. We overcame it by implementing a static website for each behavior our tests required.

The website above always grants a discount when the VALID voucher is used. Any test, manual or automated, can rely on it, which makes tests simpler and faster to run.
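The following minimal sketch shows how a fake shop's voucher rule could stay deterministic. The catalogue and voucher table are fixed, so `VALID` is accepted on every run. The names, the 10% discount and the integer-cents prices are assumptions for illustration, not the actual shop code.

```typescript
// Hypothetical rule set for one fake shop. The voucher table is fixed,
// so "VALID" is accepted on every run. The 10% discount and the
// integer-cents prices are assumptions for illustration.
interface CartLine {
  priceCents: number;
  quantity: number;
}

interface Voucher {
  percentOff: number;
}

type VoucherResult =
  | { ok: true; totalCents: number }
  | { ok: false; message: string };

const VOUCHERS: { [code: string]: Voucher } = {
  VALID: { percentOff: 10 }, // never expires, never changes
};

function subtotalCents(lines: CartLine[]): number {
  let total = 0;
  for (const line of lines) {
    total += line.priceCents * line.quantity;
  }
  return total;
}

function applyVoucher(lines: CartLine[], code: string): VoucherResult {
  if (!Object.prototype.hasOwnProperty.call(VOUCHERS, code)) {
    return { ok: false, message: "Invalid voucher" }; // what the page would display
  }
  const subtotal = subtotalCents(lines);
  // Round to whole cents so totals never carry floating-point noise.
  const discount = Math.round((subtotal * VOUCHERS[code].percentOff) / 100);
  return { ok: true, totalCents: subtotal - discount };
}

// Usage: two items at 25.00 each.
const cart: CartLine[] = [{ priceCents: 2500, quantity: 2 }];
console.log(applyVoucher(cart, "VALID")); // { ok: true, totalCents: 4500 }
console.log(applyVoucher(cart, "EXPIRED")); // { ok: false, message: 'Invalid voucher' }
```

Because nothing in the rule depends on dates or external state, the shop's behavior only changes when someone edits it.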

As a result, the website automation is written once and no longer changes for reasons outside our control. If we change the behavior or structure of one of our websites, we know to update the automation at the same time.
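One common way to write shop automation once is the page-object pattern. The following sketch illustrates that pattern and is not a description of the actual suite. The `Driver` interface and the `data-test` selectors are hypothetical. A real suite would wrap WebDriver calls, which are asynchronous; the sketch keeps them synchronous so it runs on its own.

```typescript
// Hypothetical driver abstraction; in a real suite these methods would
// wrap (asynchronous) WebDriver calls.
interface Driver {
  click(selector: string): void;
  type(selector: string, text: string): void;
  textOf(selector: string): string;
}

// Page object for one fake shop's cart page. The data-test selectors are
// assumed; since we own the shop's markup, they only change when we do.
class FakeShopCartPage {
  static readonly selectors = {
    voucherInput: "[data-test=voucher-input]",
    applyButton: "[data-test=voucher-apply]",
    total: "[data-test=cart-total]",
  };

  constructor(private driver: Driver) {}

  applyVoucher(code: string): void {
    this.driver.type(FakeShopCartPage.selectors.voucherInput, code);
    this.driver.click(FakeShopCartPage.selectors.applyButton);
  }

  total(): string {
    return this.driver.textOf(FakeShopCartPage.selectors.total);
  }
}

// Usage with an in-memory fake driver that logs every interaction.
const log: string[] = [];
const fakeDriver: Driver = {
  click: (selector) => {
    log.push("click " + selector);
  },
  type: (selector, text) => {
    log.push("type " + selector + " " + text);
  },
  textOf: () => "45.00",
};

const page = new FakeShopCartPage(fakeDriver);
page.applyVoucher("VALID");
console.log(log);
console.log(page.total()); // 45.00
```

Keeping every selector for a shop in one class means a markup change on one of our websites is a one-place update to the tests.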

Validate against real data

With fully controlled websites, we can serve live data from our infrastructure to the extension, because we can guarantee that the voucher we provide works on a given shop at any time.

During validation, the extension now talks to our own websites and to our real infrastructure. The proxy is gone, and we can reliably spot incompatibilities between the extension and the rest of our infrastructure.

The result

The following video shows a scenario running on a laptop: first the extension is built, then WebDriver runs the steps in a browser.